Don’t be silly

or do be, that’s great too

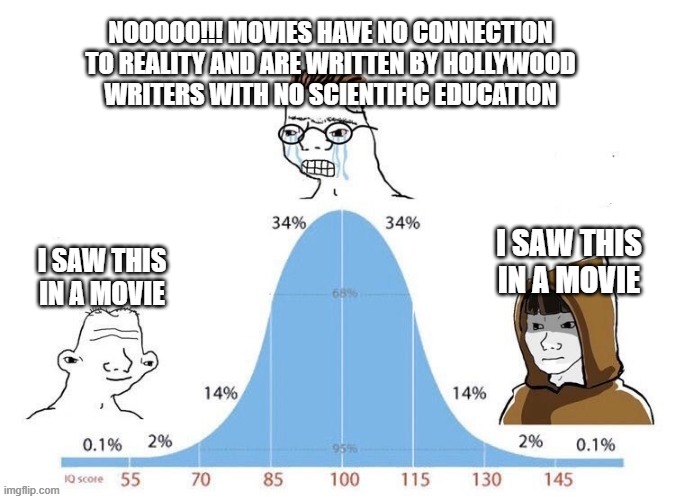

Serious people have been talking about human-level AI and superintelligence for more than half a century. But mostly it’s been too silly to talk about. In computer science departments, discussing it openly was implicitly banned. Those who did were often made into pariahs. Outside academia, the public thought it was sci-fi.

Even 10 years ago, when AI began showing real promise, you had to say you worked on “ML” or “Machine Learning” instead. But researchers kept plodding on, slowly building machine intelligence, ever careful not to say out loud what they really thought they were building for fear of humanity’s harshest punishment: ridicule. Only in the last few years has AI become real enough that we’ve even allowed researchers to say what they do is “AI” without scoffing at them.

Now, here we are today, embedding AI into every part of our lives and work. Proof that silly things are sometimes real. Proof they sometimes reshape the world.

This may seem like a small thing, but it’s not. We shy away from talking seriously about serious things when we’re afraid it makes us look silly. And it has real consequences. That’s what happened with the discourse around AI risks:

Now that AI is near, it’s becoming plain that sharing the world with another form of intelligence will have risks. Obviously, how could it not? But thinkers like Bostrom and Yudkowsky have been calling out the risks from AI for decades now, and they were largely ignored because it all seemed too silly. The real impact is that we’ve now failed to prepare for those risks. We’ve failed even to discuss them broadly as a society. Now we have little time left.

Need more social proof to start worrying?

Richard Sutton, one of the founders of modern reinforcement learning, just won the 2024 Turing Award (shared with Andrew Barto), the most prestigious award in computer science. He openly argues that we should start planning for AI to replace us as a species, and he is against trying to stop that.

Geoffrey Hinton, a 2018 Turing Award winner (also for his work in AI), is often called the godfather of deep learning. He quit a job at Google that paid him millions to do AI research, and he is now devoting his life to warning people about the dangers.

Elon Musk co-founded OpenAI in 2015, in part because he believed AI was going to determine the future of humanity and he didn’t trust Google (and Larry Page) to use it for good. After falling out with Sam Altman, he went on to found another AI company and invest billions in it in order to become a major player in this new seat of power.

As of 2024, Donald Trump has begun talking about AI, its competitive landscape, and its technology needs.

The CEOs of the most successful AI teams in the world (Dario Amodei, Demis Hassabis, Sam Altman) all openly discuss the prospect that AI smarter than all of humanity will arrive this decade and may kill us all. And if it doesn’t kill us all, we still need to figure out how to make sure we don’t kill each other with it, or accidentally empower a dictator.

Take a second to digest just how fucking serious this is. It’s literally world-defining. And then consider that AI researchers have been sounding the alarm since the 1960s, and we chose not to listen until the moment it was finally upon us. As I. J. Good wrote back in 1965:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion,' and the intelligence of man would be left far behind... Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.”

We ignored him and others for 60 years. And now it is upon us.

Excellent writers and policy wonks have been discussing AI risk for years. Finally, in just the last 12 months, AI risk has started to be taken more seriously, although there’s a long way to go. But there’s another conversation that hasn’t even begun: how to evolve our democracy to be resilient to an AI future. By default, AI will upset the foundations our democracy is built on. Implicit guardrails and explicit checks and balances may no longer function well enough when our institutions are automated. The result may be a rapid slide into dictatorship.

This is not a distant theoretical possibility. The pieces for a fully automated, AI-powered world are coming together now, as we speak, and there are incredible pressures across our economy and society to accelerate and integrate them. And there are compelling reasons why slowing down may not be the answer, even if it were possible.

It’s time to stop feeling silly and start taking seriously the silly things in front of us that are deadly serious. However silly they are.

Creating AI is going to change every single aspect of our world. But the way it changes our world is not yet written in stone. All of us together have a part to play in determining the outcome. But only if we get over ourselves and talk about these silly things.

We are creating machines smarter than us. They will have their own agency. We may not be able to keep control of them. Even if we do, they may be used by governments to gain unprecedented power over us.

These are serious fucking things. And they’re silly too. The last half century has coddled us into a false sense of stability. Whispered into our ear that silly things don’t happen on the world stage, not really. But the rules are changing. We don’t know what the new rules will be.

It’s OK to be silly. Now let’s talk about it.